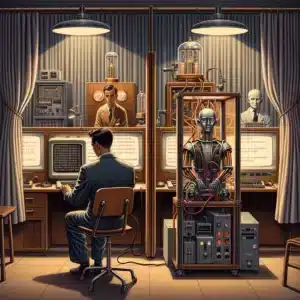

In 1950, Alan Turing proposed “The Imitation Game”, today known as the Turing test, as a hypothetical way of measuring whether a computer can think [1]. It stakes out the basic philosophical idea that “intelligence” should be judged as a behavioral attribute: if what we can observe about the behavior of a system is consistent with it being intelligent, then we should conclude that it is intelligent. This contrasts with the alternative philosophical position, argued by Searle [2], that a system requires the right type of internal mechanisms to be considered intelligent. To pin down this idea precisely, “The Imitation Game” outlines a specific experimental setup in which a human interrogator converses over a terminal with two witnesses, one human and one artificial, and has to identify which is which. If the interrogator is unable to do better than random guessing, then the computer passes the Turing test.
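One way to make "unable to do better than random guessing" concrete is an exact binomial test against a 50% chance rate, which is what pure guessing would give when picking between two witnesses. The sketch below is only an illustration under that assumption: the function names and the game counts are hypothetical and do not come from Turing's paper or from any study.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def two_sided_p_value(correct: int, games: int, chance: float = 0.5) -> float:
    """Exact two-sided binomial p-value against pure guessing.

    Sums the probability of every outcome that is no more likely
    than the observed one under the guessing hypothesis.
    """
    observed = binomial_pmf(correct, games, chance)
    tolerance = 1e-12  # guards against floating-point ties
    total = sum(
        binomial_pmf(k, games, chance)
        for k in range(games + 1)
        if binomial_pmf(k, games, chance) <= observed * (1 + tolerance)
    )
    return min(1.0, total)


# Hypothetical numbers, for illustration only (not from any study):
games = 100
correct = 59
p_value = two_sided_p_value(correct, games)
print(f"{correct}/{games} correct identifications, p = {p_value:.3f}")
```

A small p-value would suggest the interrogators are doing something other than guessing; a large one means the data are compatible with guessing, which is the situation in which the machine would be said to pass.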

Today many people believe that GPT-4 would pass or already has passed the Turing test [3][4]. This week two researchers from UCSD, Cameron Jones and Benjamin Bergen, posted a paper [5] to arXiv with the results from their experiments running an online Turing test with 652 human participants, in a manner that is highly consistent with Turing’s “Imitation Game”. Each interrogator had 5 minutes of conversation before making a determination [6]. The researchers ran seven different GPT-4 “personas” (each with different prompting instructions) as well as two GPT-3.5 personas and Joseph Weizenbaum’s ELIZA [7].

The highest-performing model (a GPT-4 persona) was able to fool human interrogators into thinking it was human 41% of the time. Human witnesses were able to convince the interrogator that they were human 63% of the time. Hence, the researchers conclude that GPT-4 does not (yet) pass the Turing test. Another surprising result was that ELIZA, devised in 1966, came in 5th out of the 11 artificial personas, successfully deceiving the interrogator 27% of the time.

Today most people don’t consider the Turing test to be a literal criterion for intelligence. For example, failing a Turing test because the witness is judged to be too intelligent to be a mere human is not grounds for concluding that the witness is not intelligent. But with the Turing test now part of our shared vernacular, it is interesting to see the results from an earnest attempt to carry it out.

References

[1] Alan M. Turing (1950) “Computing Machinery and Intelligence”, Mind, 59(236):433-460.

[2] John R. Searle (1980), “Minds, brains, and programs”, The Behavioral and Brain Sciences.

[3] Celeste Biever (2023), “ChatGPT broke the Turing test – the race is on for new ways to assess AI,” Nature.

[4] Alyssa James (2023), “ChatGPT has passed the Turing test and if you’re freaked out, you’re not alone,” TechRadar.

[5] Cameron Jones and Benjamin Bergen (2023), “Does GPT-4 pass the Turing test?” arXiv:2310.20216v1.

[6] Try it yourself at https://turingtest.live.

[7] Joseph Weizenbaum (1966). “ELIZA—A Computer Program For the Study of Natural Language Communication Between Man And Machine.” Communications of the ACM, 9(1), 36–45