The challenge

Numerous people have argued that AI might pose catastrophic or existential risks for the future of humanity. It is unclear whether we can design controls to ensure that high-level machine intelligence (HLMI) will align with human goals (the “alignment problem”), or whether an authoritarian human might use HLMI to dominate the rest of humanity. Notable books on the topic are Superintelligence by Nick Bostrom (Oxford UP, 2014) and Human Compatible by Stuart Russell (Viking, 2019).

However, these arguments vary in important ways, and their key assumptions have been extensively questioned and disputed. How can we clarify the critical disputed assumptions (the cruxes) of these arguments and understand their implications? A group of seven AI researchers launched the Modeling Transformative AI Risks (MTAIR) Project to grapple with these challenges.

Why Analytica?

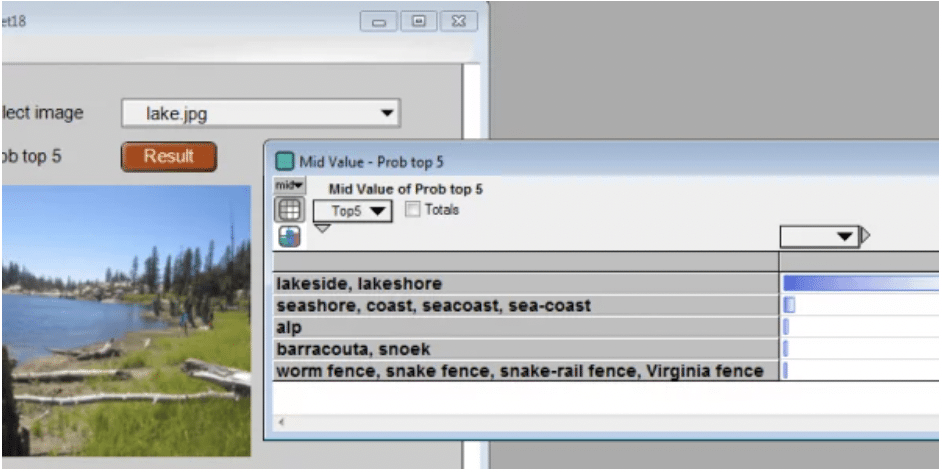

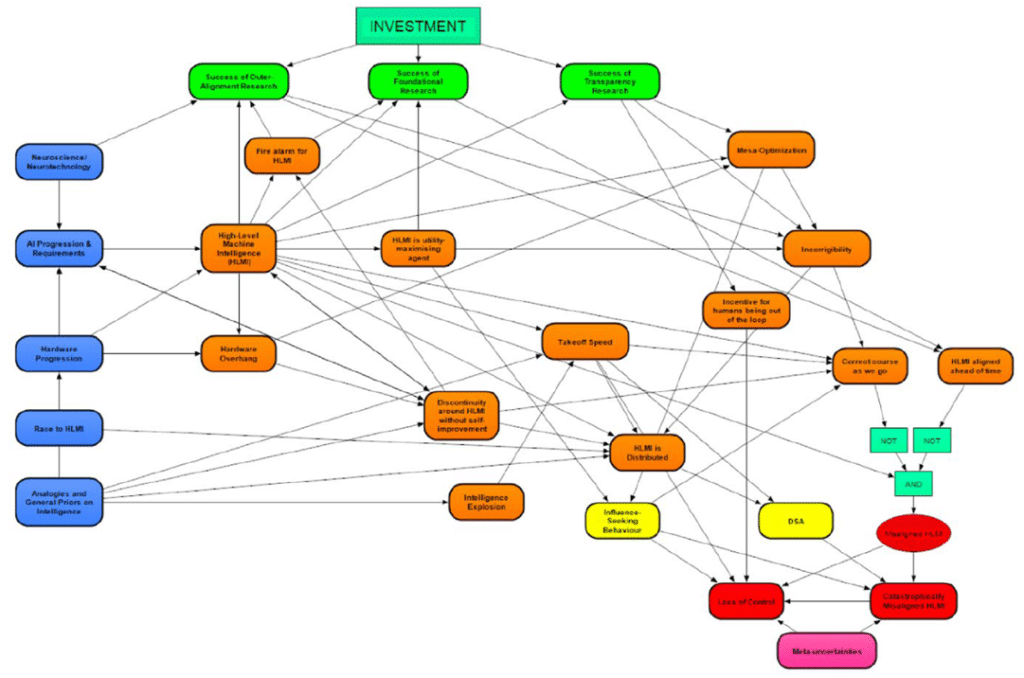

The project team chose to use Analytica to draw influence diagrams to represent the elements of the scenarios and arguments for the first phase of research. In the second phase of research now underway, they aim to extend the analysis by quantifying selected forecasts, risks, scenarios and the effects of interventions – using probability distributions to express uncertainties.

The solution

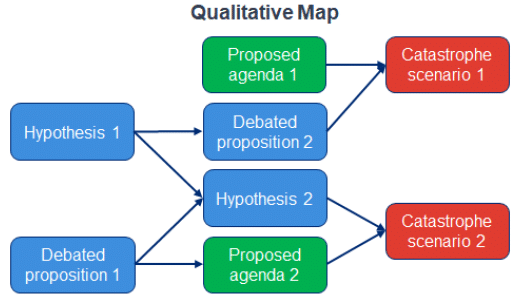

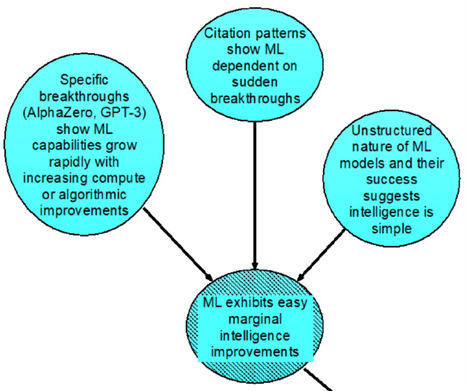

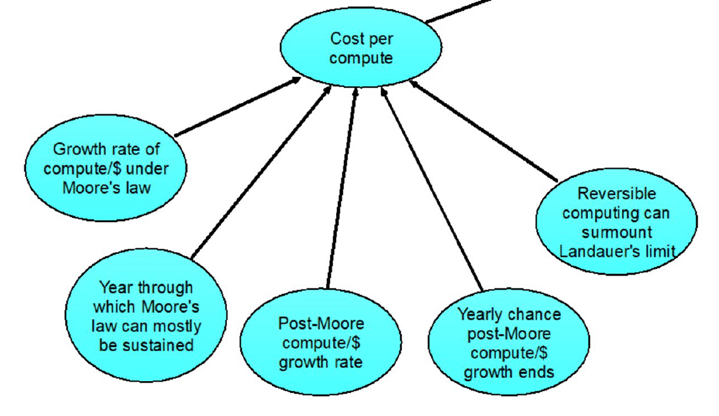

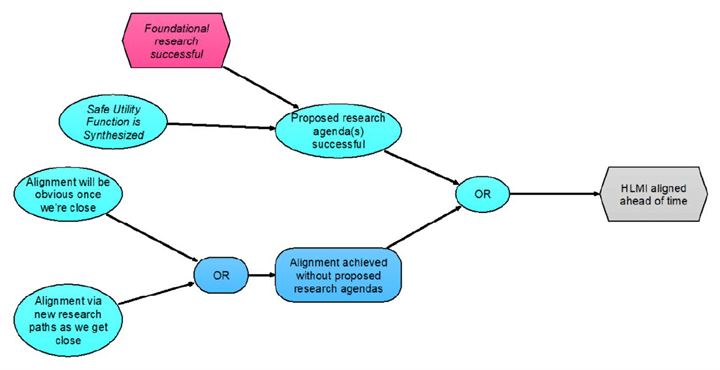

The research team organized this work into sections represented by Analytica modules. They divided their exploration into seven chapters plus an introduction, each with a primary co-author. Chapters include Analogies and General Priors on Intelligence, Paths to High-Level Machine Intelligence, Takeoff Speeds and Discontinuities, Safety Agendas, Failure Modes, and AI Takeover Scenarios.

The overview diagram shows the key modules. The red modules at the bottom identify potential catastrophic scenarios. The green Investment node at the top depicts the decision about which kinds of research to fund in order to best prevent catastrophic outcomes and produce HLMI aligned with human objectives.

The report sketches the team’s initial thoughts about the structure of risks from advanced AI. They argue that it is critical to break down the conceptual models discussed by AI safety researchers into “pieces that are each understandable, discussable and debatable.”

Authors

The initial qualitative analysis was carried out by a team of researchers on AI risks, including Sam Clarke, Ben Cottier, Aryeh Englander, Daniel Eth, David Manheim, Samuel Dylan Martin, and Issa Rice, resulting in the report Modeling Transformative AI Risks (MTAIR) Project.

Aryeh Englander, in collaboration with others, including Lonnie Chrisman and Max Henrion (CTO and CEO of Lumina), is continuing this work in an attempt to quantify some elements of the forecasts, risks, and impact of interventions.

Acknowledgements

This work was funded by the Johns Hopkins University Applied Physics Laboratory (APL) and the Center for Effective Altruism Long-Term Future Fund. Aryeh Englander received support from the Johns Hopkins Institute for Assured Autonomy (IAA).

Additional resources

Notable books on the topic are Superintelligence by Nick Bostrom (Oxford UP, 2014) and Human Compatible by Stuart Russell (Viking, 2019).