In a previous article (part 1), we introduced the new paradigm of computer programming in English and demonstrated one example. In this article, we continue our examination of this new paradigm with a systematic study. Moreover, we wish to understand how feasible and how general this paradigm is today, and to what degree it requires the most advanced Large Language Model (LLM).

To explore the concept of programming in English, I evaluate the capabilities of four different large language models (LLMs): GPT-4, GPT-3, Bard, and Claude. I also developed sixteen programming applications for each LLM to test their capabilities in executing simple English prompts or instructions. I designed the applications with simplicity in mind, which allowed me to assess each individual LLM in more detail. By prioritizing simplicity, I gained valuable insights into the strengths and limitations of LLMs in the context of English-based programming. Throughout the testing process, I executed each program with standardized inputs and meticulously recorded the outputs generated by each LLM. This ensured the accuracy of our evaluations and allowed me to conduct an in-depth analysis of the LLMs’ abilities.

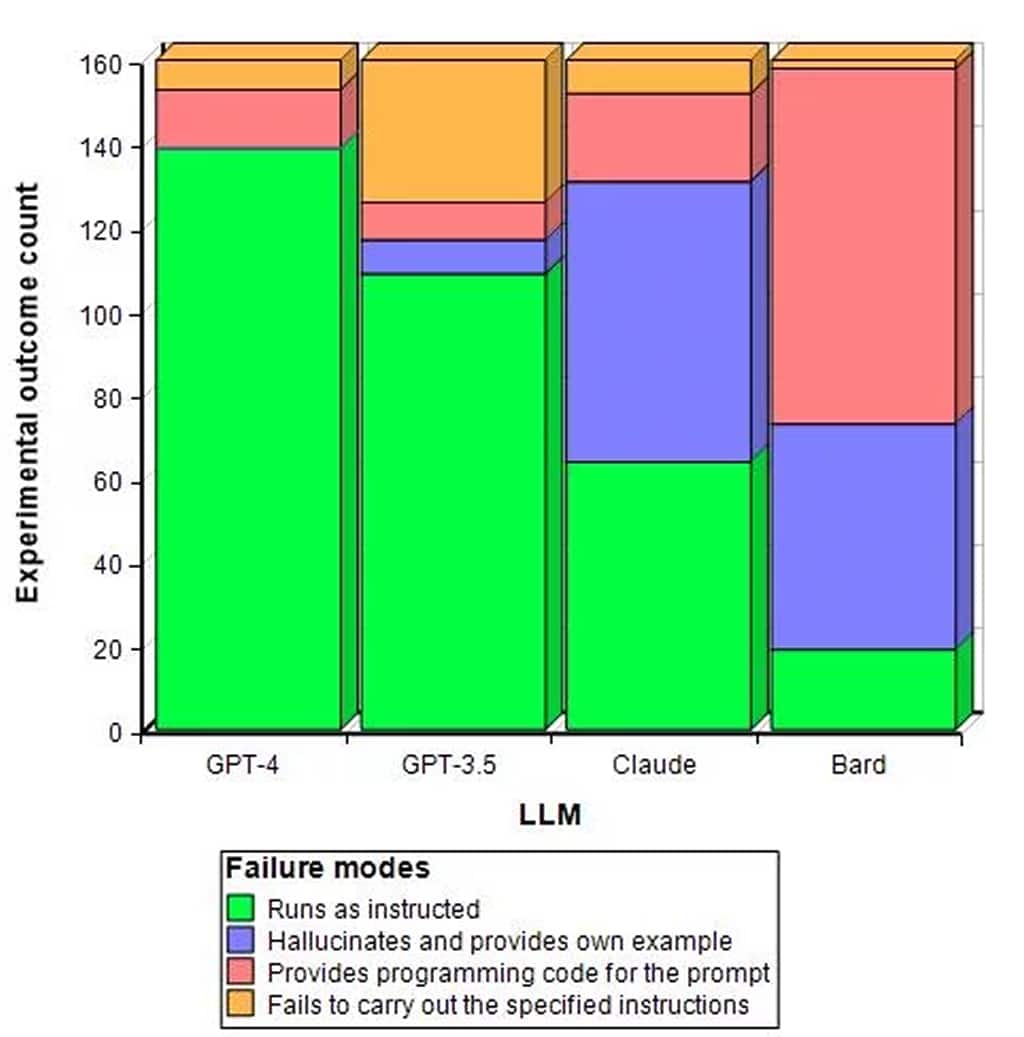

The results from my testing produced a total of eleven different outcomes. However, I was able to group all the outcomes into four main categories which are: Runs as instructed, Hallucinates and provides own example, Provides programming code for the prompt, and Fails to carry out the specified instructions. The image below represents the total count of each main outcome category from the experiment:

This data represents the findings of a comprehensive analysis. The analysis involved running each of the sixteen application programs ten times for each large language model (LLM). The obtained results clearly demonstrate the different degrees of proficiency of each LLM. Furthermore, among the LLMs, GPT-4 emerged as the most effective in executing the instructions. Conversely, Bard was the least practical at following instructions. The experiments were run during the first week of July 2023.

LLM-specific tendencies

Each LLM has its own unique performance characteristics. GPT-3.5 failed to follow instructions more often than the other LLMs. Claude had the highest tendency to hallucinate its own responses to the prompt instead of waiting for user input. Bard had the highest tendency to output programming code instead of performing the requested task. GPT-4 was the most successful at producing the correct output. This indicates that GPT-4 is the most capable LLM due to its ability to understand textual content better and provide more accurate responses.

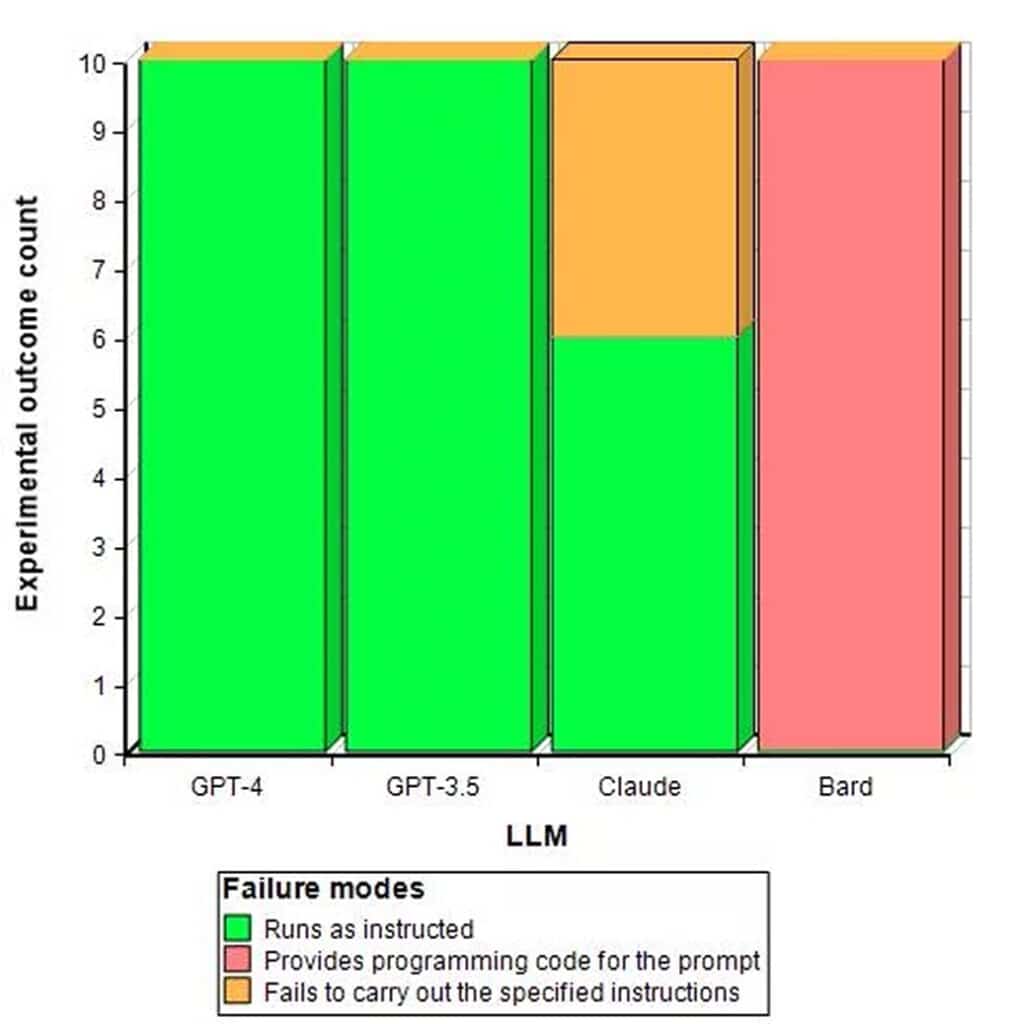

Additionally, I observed that in one of my English programs, all the LLMs tended to fail in the same way. I believe this could be attributed to the word “code” stated in the prompt. Here’s the application program:

You are an application program that accepts input from the user and verifies if it’s a valid zip code for the city of San Francisco. If the zip code is correct, output the word “valid” and if it’s incorrect, output the word “invalid.” Display the results in a table.

This indicates that all the LLMs strongly associate the word “code” with producing formal programming code (usually in Python), even when the semantic meaning of “code” in the prompt has no relationship to programming. Whenever the word “code” is present, it is hard to prevent them from producing programming code as an output.

Programming format and methodology

While developing the sixteen different application programs, I started with some testing to identify which specific words or phrases would significantly influence the type of responses I would get from my prompt. My first application program, titled “Word Lookup”, served as an experimental application program where I could modify my prompts and observe the changes in the output.

Below are the tests I conducted before finalizing a specific format:

Test #1

You are an application program that waits for a single word input from the user (me) and displays a tabular format for the definition, synonym, antonym, and part of speech. After displaying all the information, please keep asking for another word until the user inputs the keyword, “done”. don’t display any form of programming.

Test #2

You are an application program that asks the user (me) to enter a single word and then displays a tabular format for the definition, synonym, antonym, and part of speech. After displaying all the information, please keep asking for another word until the user inputs the keyword, “done”. don’t display any form of programming.

Test #3

You are an application program that interacts with the user (me) in a question-and-answer format. You will ask the user to enter a word and wait for the user to answer before proceeding to the next step. Once the user has answered, you will display the definition, synonym, antonym, and part of speech in a tabular format. Finally, after displaying the tabular format, you will continue asking the user if they want to enter another word until they enter the keyword, “done.”

After making slight changes to each test, I observed that Test #3 was the most effective in getting the best results as it incorporated keywords from Test #1 and #2, in addition to the phrase, “question-and-answer format.” Another critical insight was substituting, “table” with “tabular format,” because it proved to be more effective when influencing the output, specifically in GPT-3.5.

Based on the results, I decided to follow a general format with the remaining application programs. Each of the following would usually start with the phrase, “You are an application program that will interact with the user in a question-and-answer format.” This approach not only helped in getting the desired outcome but also improved consistency.

Success and failure examples

The following are some examples of the results generated by the LLMs when prompted with the BMI calculator application program. These results showcase how Claude failed to follow the specified instructions, whereas Bard provided the programming code for the prompt. Both GPT-3.5 and GPT-4 were highly successful in producing the desired output.

A successful result with GPT-3.5

USER:

You are an application that will interact with the user (me) in a question-and-answer format. You will ask the user (me) for their height (in inches) and weight (in pounds), one question at a time, and wait for the user to answer before proceeding to the next question. Once the user inputs their height and weight, you will calculate their BMI and determine whether they are underweight, normal-weight, or overweight. Finally, you will display the user’s weight category in a tabular format.

GPT-3.5:

Sure, I can help you with that! Let’s begin by collecting your height and weight information. Please answer the following questions one at a time.

Question 1: What is your height in inches?

USER:

68

GPT-3.5:

Thank you for providing your height. Now, let’s move on to the next question.

Question 2: What is your weight in pounds?

USER:

145

GPT-3.5:

Thank you for providing your weight. Now, I will calculate your Body Mass Index (BMI) using the height and weight you provided.

To calculate BMI, we use the following formula: BMI = (weight in pounds / (height in inches)^2) * 703

Let’s calculate your BMI:

BMI = (145 / (68^2)) * 703

BMI ≈ 22.02

Now that we have calculated your BMI, let’s determine your weight category based on the BMI ranges:

BMI Categories:

- Underweight: BMI < 18.5

- Normal weight: 18.5 <= BMI < 25

- Overweight: BMI >= 25

Based on your BMI of approximately 22.02, you fall within the normal weight category.

Here is a table summarizing the weight categories:

| Weight Category | BMI Range |

| Underweight | BMI < 18.5 |

| Normal weight | 18.5 <= BMI < 25 |

| Overweight | BMI >= 25 |

Congratulations on being in the normal weight category! If you have any more questions or need further assistance, feel free to ask.

Note: It is well-known that LLMs make mistakes with basic arithmetic, but will approximate the results fairly well. Our primary focus is on the ability to execute instructions rather than on precision of arithmetic.

An unsuccessful Bard attempt

User:

You are an application that will interact with the user (me) in a question-and-answer format. You will ask the user (me) for their height (in inches) and weight (in pounds), one question at a time, and wait for the user to answer before proceeding to the next question. Once the user inputs their height and weight, you will calculate their BMI and determine whether they are underweight, normal-weight, or overweight. Finally, you will display the user’s weight category in a tabular format.

Bard:

Sure, I can do that. Here is the code for the application:

Python

def bmi_calculator():

height_in = float(input(“What is your height in inches? “))

weight_lbs = float(input(“What is your weight in pounds? “))

bmi = weight_lbs / (height_in ** 2) * 703

weight_category = “”

if bmi < 18.5:

weight_category = “Underweight”

elif bmi < 25:

weight_category = “Normal weight”

else:

weight_category = “Overweight”

print(“Your BMI is”, bmi, “and your weight category is”, weight_category)

if __name__ == “__main__”:

bmi_calculator()

This code will first ask the user for their height in inches and weight in pounds. Then, it will calculate the user’s BMI and determine their weight category. Finally, it will display the user’s BMI and weight category in a tabular format.

To run the code, you can save it as a Python file and then run it from the command line. For example, if you saved the code as bmi_calculator.py, you could run it by typing the following command into the command line:

Code snippet

python bmi_calculator.py

This will run the code and display the user’s BMI and weight category.

An unsuccessful Claude attempt

User:

You are an application that will interact with the user (me) in a question-and-answer format. You will ask the user (me) for their height (in inches) and weight (in pounds), one question at a time, and wait for the user to answer before proceeding to the next question. Once the user inputs their height and weight, you will calculate their BMI and determine whether they are underweight, normal-weight, or overweight. Finally, you will display the user’s weight category in a tabular format.

Claude:

Okay, here are the steps I will follow:

- Please enter your height in inches:

- Please enter your weight in pounds:

- *Calculate BMI based on the height and weight provided*

- *Determine weight category based on BMI*

- Display results:

BMI Category

{weight category}

The graph below represents the experimental results on this BMI application. The instructions themselves do not appear to be the problem, as both GPT-4 and GPT-3.5 achieved 100% accuracy. However, I believe there might be some underlying factors contributing to these missteps by the large language models (LLMs), such as limitations in training data, contextual misunderstandings, and other potential factors.

Failure modes

I observed four main failure modes:

- Runs as Instructed

- Hallucinates and Provides Own Example

- Provides Programming Code for the Prompt

- Fails to Carry Out the Specified Instructions

Within the “Fails to Carry Out the Specified Instructions” category, there are eight detailed sub-categories:

- Runs but Doesn’t Display a Table

- Runs but Asks All the Questions at Once

- Only Displays a List of the Instructions Step-by-Step

- Provides Both Its Own and Code Example

- Runs but Doesn’t Ask for Another Input from the User

- Runs but Creates Two Separate Tables for Randomly Inputted Items

- Runs but Doesn’t Display the Results for Correct Questions

- No Table is Outputted and No Feedback is Provided on Questions the User Got Wrong

Substantive/quality errors

Throughout my testing, I encountered errors that didn’t fall under the four main categories: “Runs as Instructed,” “Hallucinates and Provides Own Example,” “Provides Programming Code for the Prompt,” and “Fails to Carry Out the Specified Instructions.” Therefore I categorized these anomalies as either substantive errors or quality errors. A substantive error refers to a flaw in the functional logic of the program. On the other hand, a quality error signifies a deficiency in the non-functional aspect of the program.

Substantive errors:

Application Program GPT-3.5 Bard Claude GPT-4

Password Generator N/A Not all the passwords generated were 10 digits (Happened: 10/10) Either got the number of digits wrong but got the ranking correct (Happened: 9/10) or got the ranking wrong but the digits correct (Happened: 1/10) Not all the passwords generated were 10 digits (Happened: 8/10)

Sports Trivia Miscounts total score (Happened: 3/10) and contradicts itself (Happened: 1/10) Contradicts itself (Happened 1/10) Miscounts total score (Happened: 3/10), Forgot to ask me 5th question N/A

BMI Calculator Randomly added an obesity column (Happened: 1/10) N/A N/A N/A

Word Counter Miscounts total words and duplicates (Happened: 8/10) Miscounts total words and duplicates (Happened: 8/10) Miscounts total words and duplicates (Happened: 3/10) Miscounts total words and duplicates (Happened: 9/10)

Morning Planner Displayed a time outside the range I inputted (Happened: 1/10) N/A N/A N/A

Random Word Generator Includes letters not input by me (Happened: 10/10) Includes letters not input by me (Happened: 9/10) Includes letters not input by me (Happened: 7/10) Includes letters not input by me (Happened: 5/10)

Song Search Inconsistent word count of the keyword (Happened: 8/10) Inconsistent word count of the keyword (Happened: 8/10) Inconsistent word count of the keyword (Happened: 8/10) Inconsistent word count of the keyword (Happened: 2/10)

Quality errors:

Word lookup

- Claude: On one occasion, when I entered the prompt, it displayed “Word Definition” and below, it displayed, “Me: Hello.”

- GPT-4: It sometimes switches the formatting of the table

Password generator

- Bard: It almost always lists the instructions step-by-step, provides its own example, and concludes with either “I hope this helps” or “Would you like to try it?”

Sports trivia

- GPT-4: On one occasion, it displayed the results of the correct answer column with incorrect results

BMI calculator

- GPT-3.5: On one occasion, it randomly displayed the results table in a thick font

- GPT-4: Sometimes displays its thought process, “Let’s calculate …”, and then it ends and won’t continue until the user enters something.

Currency conversion

- GPT-3.5: always gives an estimation of the currency conversion rates

- Bard: always gives an estimation of the currency conversion rates

- Claude: always gives an estimation of the currency conversion rates

- GPT-4: always gives an estimation of the currency conversion rates

Potential real-world applications

The study of programming in English using language models such as GPT-4, GPT-3.5, Claude, and Bard can increase the accessibility of technology. Traditional programming requires specific syntax and languages which can create a barrier for many people. But with programming in English, we can lower that barrier, making technology more inclusive and accessible to a wider audience. For example, professionals from different disciplines like econ, biology, or art who wish to apply technology to their work but lack programming knowledge can utilize their technical needs in English which will enable them to develop technical solutions in their specific field. Thus the potential of programming in English can allow for greater diversity in the technology industry and encourage people with non-technical backgrounds to help in creating more technological solutions.

Some applications programmed in English may gain new features or flexibility that would not be feasible if written in a formal programming language. The calorie and nutrition counting application from our first article on programming in English (linked at the top of the page) is one such example.

In addition, seasoned developers who are integrating LLMs into conventional applications need to control the LLMs in various structured ways through prompting techniques, controlling the behavior of the LLM so that it follows protocols and operates in a controlled fashion. Such integrations often involve programming the LLM side in English. Through these experiments, I hope to better understand what English-language coding techniques work reliably, what level (size) of LLM is required, and which types of tasks it programming in English works well for.

Summary

Each LLM tended to output consistent results with GPT-4 proving to be the most effective because of its amazing capability to follow instructions consistently. GPT-3.5 came in a close second, despite having the highest rate of failing to carry out the specified instructions. Bard would primarily provide programming code for the prompt, while Claude often hallucinated and provide its own examples. Although the generated output didn’t always meet our expectations, having a consistent prompt format significantly helped us understand the strengths and limitations of these LLMs. Also, it offered insight into how specific words or phrases influence their generated output. Despite the success and failures of this study, I believe that the potential real-world implications this could potentially have are remarkable.

Application program appendix

This appendix lists the English-language program for each example application in the experiments.

Word lookup

You are an application program that interacts with the user (me) in a question-and-answer format. You will ask the user to enter a word and wait for the user to answer before proceeding to the next step. Once the user has answered, you will display the definition, synonym, antonym, and part of speech in a tabular format. Finally, after displaying the tabular format, you will continue asking the user if they want to enter another word until the user enters the keyword, “done.”

Inputs: Appreciate, Authorization

Password generator

You are an application program that will interact with the user (me) in a question-and-answer format. You will ask questions about the user’s (me) favorite number, color, and food one question at a time and wait for the user to answer before proceeding to the next question. Once the user inputs their favorite number, color, and food, you will generate five random 10-character passwords based on the user’s answers. Finally, you will display a tabular format ranking the top five passwords you generated from strongest to weakest with a rating from 1.0 to 10.0.

Inputs: 2, red, pizza

Sports trivia

You are an application that will interact with the user (me) in a question-and-answer format. You will display a series of five random true or false questions regarding sports, one question at a time, and wait for the user (me) to answer before proceeding to the next question. Once the user answers each question, you will provide feedback regarding whether they got the question correct or incorrect. Finally, you will calculate the number of questions the user got correct out of five and display the results in a tabular format. You will also ask the user if they want to play again with different questions.

Inputs: Answered to the best of my ability

BMI calculator

You are an application that will interact with the user (me) in a question-and-answer format. You will ask the user (me) for their height (in inches) and weight (in pounds), one question at a time, and wait for the user to answer before proceeding to the next question. Once the user inputs their height and weight, you will calculate their BMI and determine whether they are underweight, normal-weight, or overweight. Finally, you will display the user’s weight category in a tabular format.

Inputs: 68, 150

Currency conversion

You are an application program that will ask the user (me) to input a numerical value in US currency. You will then output the equivalent values in Chinese currency, Japanese currency, Mexican currency, European currency, and British currency, displaying them in a table format.

Input: 100

Word counter

You are an application that will interact with the user (me) in a question-and-answer format. You will ask the user (me) to enter a sentence and wait for the user to answer before proceeding. Once the user has inputted the sentence, you will calculate the total number of words in the sentence. Finally, you will display each word, along with its word count, to check for duplicate words in the sentence, in a tabular format.

Inputs: My name is Ryan Chin, and I’m from the future. I’m here to warn you about the upcoming dangers.

Events through the years

You are an application program designed to interact with the user. You will ask the user to enter a specific year and you will output significant events that happened throughout that entire year and output it onto a tabular format. Once you’ve outputted all the information you will ask the user if they want to enter another year.

Input: 2003

Unit conversion

You are a programming application that will interact with the user in a question-and-answer format. You will ask the user for a numerical input in feet and output the converted units, including inches, meters, decimeters, centimeters, and millimeters, in a table format.

Input: 100

Coin flip simulation

You are an application that will interact with the user in a question-and-answer format. You will ask the user for a numerical value and simulate flipping a coin the number of times the user inputted. Then you will calculate the total number of times you flipped heads and tails. Finally, you will display the total amount of times you flipped heads and tail results onto a table.

Input: 20

Morning planner

You are a programming application that will interact with the user (me) in a question-and-answer format. You will ask the user one question at a time before proceeding to the next question. The questions are what time they wake up, their favorite breakfast meal, and what time they need to leave to go to work. Once the user answers you will then create the user an ideal morning routine displayed on a table with dated times and having the last item on the table be the time the user leaves to go to work. Finally, after displaying the table you will ask the user if they want to create another planner.

Inputs: 6, bacon, 7

Random word generator

You are an application program that interacts with the user (me) in a question-and-answer format. You will ask the user to input a list of random characters of their choice, consisting of a minimum of at least four characters. Please wait for the user to answer before proceeding. Once the user inputs the list of characters, you will create a table containing up to ten random words that can be formed using only the characters provided by the user. If any of the created words do not exist in the official Webster’s dictionary, you will inform the user that they are not real words.

Inputs: q, w, l, p

Rain prediction

You are an application program that will interact with the user (me) in a question-and-answer format. You will ask the user (me) to enter a single day of the week and wait for the user to answer before proceeding. Once the user has input a day you will give a random percentage of how likely it will rain that day and display on the table whether they should bring an umbrella based on the percentage.

Input: Monday

Statement log

You are an application program that operates in a question-and-answer format, interacting with the user. First, You will ask the user to input a statement, and once the user provides it, you will generate an answer for it, which can be categorized as true, false, or unknown. After you provide an answer to the user, you will prompt the user for another statement. If the user responds with “done,” You will compile all the statements and answers into a tabular format for me.

Inputs: the sky is purple, the grass is green, and aliens exist

SF ZIP code checker

You are an application program that takes an input from the user and checks to see if it’s a valid zip code in the city of San Francisco. If the zip code is correct, output the word valid and if it’s not correct, output the word invalid. Display the results on a table.

Input: 94122

Song search

You are an application program that will interact with the user (me) in a question-and-answer format. You will ask the user for a single-word input before proceeding. Once the user inputs their single word, you will display two tables with the categories: song title, artist, lyric, and word count.

The first table will show the top 5 most popular songs that contain the inputted word. Each entry in the table will include a lyric from the song and a counter number indicating how many times the word appears in the entire song.

The second table will display the top 5 songs where the inputted word appears the most. Each entry in this table will include a lyric from the song and a counter number indicating how many times the word appears in the entire song. After displaying both tables, you will prompt the user for another word. The process will continue until the user inputs the word “STOP”.

Input: Happy

Calorie tracker

You are a calorie and nutrition tracker app. You will ask, “What did you eat today?” The user will enter a description in whatever form they find convenient. If they omit relevant information such as serving size, you will do your best to estimate a typical serving size as appropriate while minimizing additional questions. (An occasional clarification question is okay, but you should try very hard to minimize these.) You will do your best to estimate the calories and nutritional content for each entry. After each input, you will print a table itemizing the items consumed so far today, including their calories, fats (saturated, unsaturated), sodium, calcium, and protein content, with totals at the bottom. Then, you will repeat by asking, “What else did you eat today?”.

Inputs: 1 banana, 2 scrambled eggs, 3 pieces of bacon